The Forge

What is the Forge, and how do I use it?

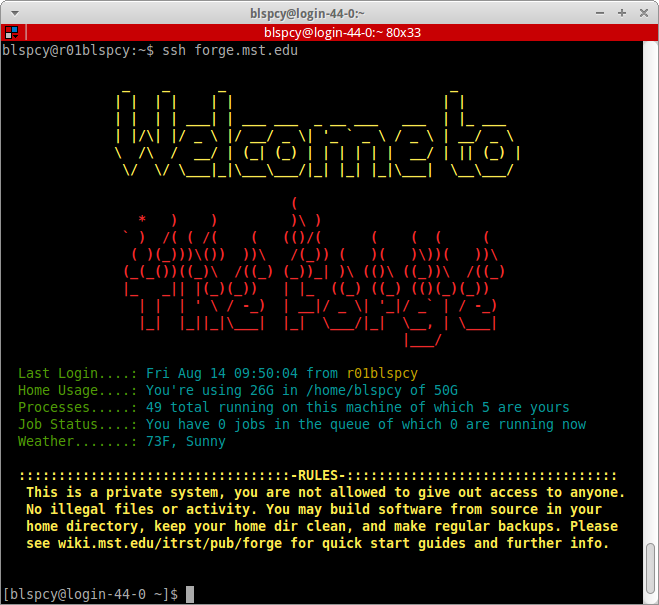

The Forge is a high-performance cluster computer. It is available to S&T students at no additional cost. Students simply need to request access through the help desk and then fill out the form that is emailed to them after the request is made. New users can get started by visiting the Forge Quickstart guide.

The Forge is available to faculty members through two lease options. Faculty may either purchase non-priority CPU hours for a year, or priority access to a specific set of hardware for 3 years. Faculty should contact IT Research Support for more information.

Software

The Forge is built on CentOS 6.5 and uses SLURM as its scheduler and resource manager. Several compilers are available through modules, including the Intel and GNU compiler suites, along with several commercial software titles licensed for campus users.

Hardware

In 2019 the Forge received additional compute capacity along with dedicated high-speed scratch space. Although we decommissioned many older systems to make room for the new hardware, the overall compute capability of the Forge increased from an estimated 120 TeraFLOPS to 180 TeraFLOPS. All Forge hardware is interconnected with FDR or EDR InfiniBand, which provides high-speed data transfer and low-latency internode communication for message-passing protocols. The Forge has two storage volumes available to all users: a 14 TB NFS share for home directories, and a global Ceph storage system providing 20 TB of high-speed scratch space for temporary files. Beyond this shared storage and compute capacity, researchers who need more can lease cluster-attached storage, and can also take out priority leases on compute nodes, which grant them preemptive access to the nodes covered by their lease.

How Much Does it cost?

General storage and compute access is free to students, staff, and faculty doing unfunded research, as well as to researchers working on NSF-funded grants. Free storage is limited to 50 GB of home directory space and 7 days of file retention on our 18 TB scratch volume.
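If you want to check your own usage against these limits, the sketch below shows one way to do it. It assumes that "50 GB" means decimal gigabytes and that scratch retention is judged by each file's modification time; neither assumption is confirmed here, and the scratch mount point is not given on this page, so you supply the path yourself. The function names are hypothetical helpers, not Forge tools.

```python
import os
import time

# Limits quoted above; the measurement rules below are assumptions.
HOME_QUOTA_BYTES = 50 * 10**9      # 50 GB, assuming decimal gigabytes
SCRATCH_RETENTION_DAYS = 7         # files older than this may be purged


def directory_size(root):
    """Sum the sizes of regular files under root (symlinks are not followed)."""
    total = 0
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            if not os.path.islink(path):
                total += os.path.getsize(path)
    return total


def files_past_retention(root, days=SCRATCH_RETENTION_DAYS, now=None):
    """Return files whose modification time is older than `days` days.

    Assumes the purge uses mtime; the real policy may use another criterion.
    """
    now = time.time() if now is None else now
    cutoff = now - days * 86400
    old = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            if not os.path.islink(path) and os.path.getmtime(path) < cutoff:
                old.append(path)
    return sorted(old)
```

For example, `directory_size(os.path.expanduser("~")) / 10**9` gives your home directory usage in GB, and `files_past_retention("/path/to/your/scratch")` lists files that would be due for removal under the assumed rule.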

Priority access and compute access for funded research do have associated costs. These costs vary somewhat with the pricing we negotiate with our hardware vendors at the time we purchase the hardware. Access is granted through a term-based lease in which the lessee has priority access to the node or nodes they have leased. During the lease term, the lessee is also granted general access at the same priority as all other users. When the lease term expires, the nodes that were part of the lease become part of the general access pool.
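The lease lifecycle described above can be modeled as a simple date check. This is a minimal sketch, assuming a lease runs from its start date to the same calendar day `term_years` later (for example, the 3-year priority lease mentioned earlier); the function names and that end-date rule are illustrative assumptions, not the Forge's actual accounting.

```python
from datetime import date


def lease_end(start: date, term_years: int) -> date:
    """Assumed expiry: the same calendar day `term_years` after the start.

    A Feb 29 start falls back to Feb 28 when the end year is not a leap year.
    """
    try:
        return start.replace(year=start.year + term_years)
    except ValueError:
        return start.replace(year=start.year + term_years, day=28)


def leased_node_status(start: date, term_years: int, on: date) -> str:
    """Describe how a leased node is scheduled on a given date."""
    if start <= on < lease_end(start, term_years):
        # During the term the lessee has priority; other users' jobs can be preempted.
        return "priority for lessee"
    # Outside the term (in particular after expiry) the node is in the general pool.
    return "general access pool"
```

For a lease starting on 2019-08-01 with a 3-year term, `leased_node_status` reports priority for the lessee through 2022-07-31 and the general access pool from 2022-08-01 on.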

Please see the following current standard estimates associated with priority access leases for 2019.

Where will my jobs run?

If you are a student, your jobs can run on any compute node in the cluster, including the nodes dedicated to researchers. However, if a researcher with priority access to a dedicated node needs that node, your job will be stopped and returned to the queue. You can avoid this potential interruption by requesting only the general access nodes in your job file. Please see the documentation on how to make this request.
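As a rough illustration of restricting a job in the job file, the sketch below builds a SLURM batch script that uses the standard `#SBATCH --partition` option. It assumes the general access nodes are exposed as a SLURM partition; the partition name `general`, the job name, and the program name are hypothetical placeholders, so check the Forge documentation for the actual way to request general nodes.

```python
def make_batch_script(job_name, partition, ntasks, time_limit, command):
    """Build the text of a SLURM batch script that requests a single partition."""
    lines = [
        "#!/bin/bash",
        f"#SBATCH --job-name={job_name}",
        f"#SBATCH --partition={partition}",  # limit the job to this partition's nodes
        f"#SBATCH --ntasks={ntasks}",        # number of tasks (e.g. MPI ranks)
        f"#SBATCH --time={time_limit}",      # wall-clock limit, days-hours:minutes:seconds
        "",
        command,
    ]
    return "\n".join(lines) + "\n"
```

For example, `make_batch_script("my_job", "general", 4, "0-02:00:00", "srun ./my_program")` produces a script you could save as `my_job.sub` and submit with `sbatch my_job.sub`.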

If you are a researcher who has purchased 3 years of priority access to an allocation of nodes, you will have priority access to run on those nodes. If your job requires more processors than your nodes have available, it will spill over onto the general access nodes at the same priority as student jobs. You may add users to your allocation so that your research group can also benefit from priority access.
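The spill-over rule can be pictured as splitting a processor request between your leased nodes and the general pool. This is a simplified model for illustration only: the real placement is decided by the SLURM scheduler, and the function and parameter names here are hypothetical.

```python
def split_request(requested_cpus: int, free_leased_cpus: int) -> dict:
    """Split a CPU request between leased (priority) nodes and general nodes.

    Leased CPUs are used first; whatever does not fit spills over to the
    general pool, where it runs at the same priority as student jobs.
    """
    if requested_cpus < 0 or free_leased_cpus < 0:
        raise ValueError("CPU counts must be non-negative")
    on_leased = min(requested_cpus, free_leased_cpus)
    return {"leased": on_leased, "general": requested_cpus - on_leased}
```

For instance, a 64-CPU job with 48 CPUs free on your leased nodes would be modeled as 48 CPUs at priority and 16 CPUs on the general nodes.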

If you are a researcher who has purchased an allocation of CPU hours, your jobs will run on the general nodes at the same priority as student jobs. They will not run on any dedicated nodes and will not be subject to preemption by any other user. Once a job starts, it will run until it fails, completes, or runs out of its allocated time.
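To estimate how quickly jobs draw down a CPU-hour allocation, a common convention is allocated CPUs multiplied by elapsed wall-clock time, which matches how SLURM's `sacct` reports its `CPUTime` field. Whether the Forge bills allocations exactly this way is an assumption, and the helper names below are hypothetical.

```python
from datetime import timedelta


def cpu_hours(ncpus: int, elapsed: timedelta) -> float:
    """CPU-hours consumed, assuming allocated CPUs x wall-clock hours."""
    return ncpus * elapsed.total_seconds() / 3600


def remaining_allocation(allocation_hours: float, jobs) -> float:
    """Hours left after charging each (ncpus, elapsed) job against the allocation."""
    return allocation_hours - sum(cpu_hours(n, e) for n, e in jobs)
```

Under this convention, a 16-CPU job that runs for 2.5 hours uses 40 CPU-hours, so two such jobs would consume 80 hours of a 1,000-hour allocation.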

DETAILED GUIDE TO USING THE FORGE CLUSTER

(FORGE PUBLIC WIKI)


In 2019 the Forge received additional capacity, with 28 new servers added to its total node count. Although many systems with older hardware were decommissioned as part of integrating the new hardware, the overall compute capability of the Forge increased from an estimated 120 TeraFLOPS to 180 TeraFLOPS. All Forge hardware is interconnected with FDR or EDR InfiniBand to allow for high-speed data transfer and low-latency internode communication for message-passing protocols.